Digital library of construction informatics and information technology in civil engineering and construction


Paper: w78-2010-24

| Paper title: | Metrics for the Analysis of Product Model Complexity |

| Authors: | Ulrich Hartmann, Petra von Both |

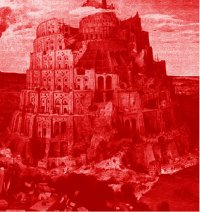

| Summary: | Today we see several product model standards growing ever more corpulent as they absorb concepts from neighboring domains, but behind the good intention of becoming ‘complete’ lurk the perils of complexity. Rising computing power gives us the means to handle large digital models, but the overall situation resembles that of the mid-1970s, when the software industry ran into the so-called software crisis. Edsger Dijkstra put it quite bluntly: “as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem”. Pursuing traditional concepts with ever more powerful tools may uncover structural deficits that were not anticipated before. It is in the nature of complexity that no single ‘magic’ number representing the complexity of a general system is at hand; comparing the complexity of systems on a universal level is therefore next to impossible by definition. Models (in our case, product models) are abstractions of the systems they represent, reducing real-world concepts to the necessary minimum. Complexity analysis on this reduced set of conceptual model elements can therefore be carried down to a numerical level. Metrics for assessing software complexity and design quality have proven themselves in practice. The article gives a brief overview of complexity metrics, how to apply them to product models, and possible strategies for keeping model complexity at a reasonable level. Different model standards are analyzed, distinguishing between logical complexity inherent to the problem domain and formal complexity imposed by the model notation. Depending on the metrics applied, views on complexity can be structural, behavioral, quantitative, and even cognitive. In conclusion, a link can be drawn between different aspects of model complexity and potential model acceptance. |

| Type: | normal paper |

| Year of publication: | 2010 |

| Keywords: | product model, complexity, metrics, IFC, CityGML |

| Series: | w78:2010 |

| ISSN: | 2706-6568 |

| Download paper: | /pdfs/w78-2010-24.pdf |

| Citation: | Ulrich Hartmann, Petra von Both (2010). Metrics for the Analysis of Product Model Complexity. CIB W78 2010 - Applications of IT in the AEC Industry (ISSN: 2706-6568), http://itc.scix.net/paper/w78-2010-24 |

inspired by SciX, ported by Robert Klinc [2019]